On TDD

2024-09-13

Everybody talks about TDD, but only a few know what it actually is.

And "Driven Development" sounds like too strong a claim. TDD is a great practice that I use and recommend using everywhere. But don't think that the entire development process is driven solely by tests.

Unfortunately, to start using TDD, you need to feel how it works; simple explanations don't help. But to feel how TDD works, you need to start using it. I'll try to solve this chicken-and-egg problem with a deliberately artificial toy example.

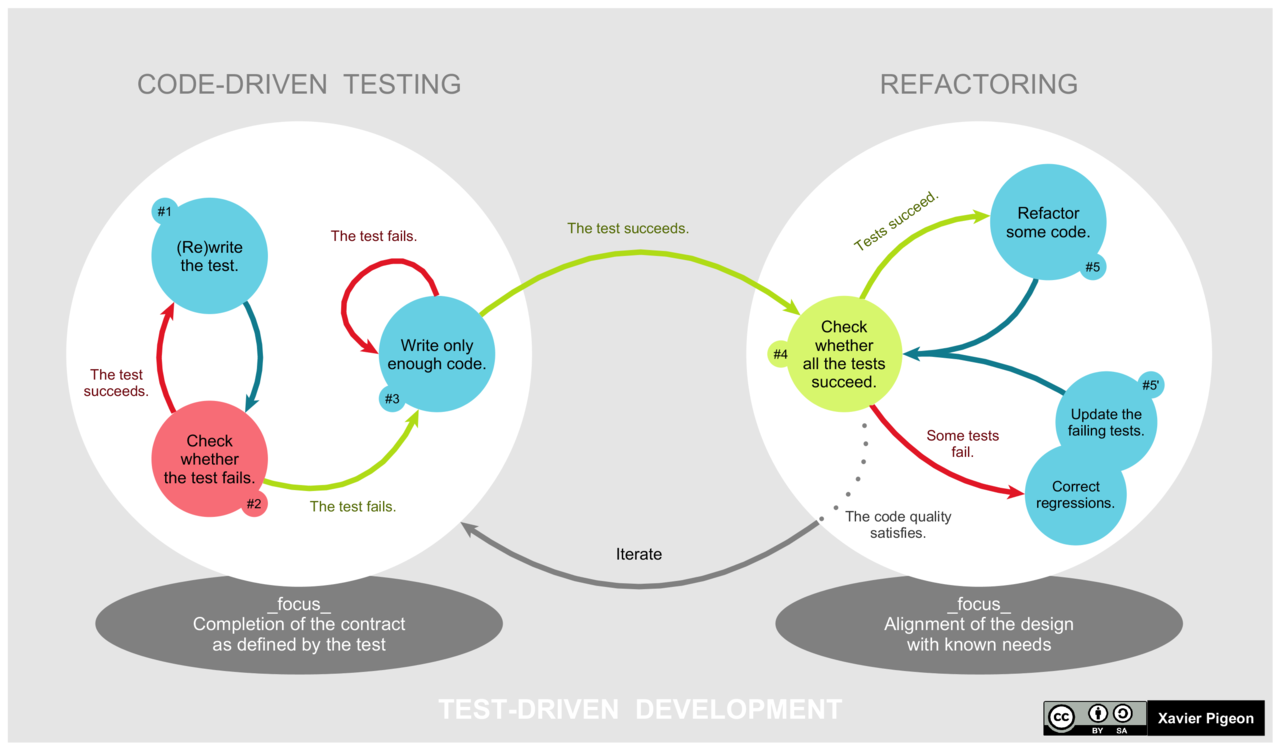

TDD is not about writing tests before the code. It is about writing tests together with the code.

Let's create a completely useless Calculator class.

So, first we declare in the test our intention to create a new instance of the class.

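```java
import static org.junit.Assert.assertEquals;

import org.junit.Before;
import org.junit.Test;

public class CalculatorTest {

    Calculator calc;

    @Before
    public void setUp() {
        calc = new Calculator();
    }
}
```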

Obviously, this test doesn't compile. A non-compiling test is a "red" test. So we go to the code and fix it, with minimal effort. In this case, it's enough to create an empty class.

```java
public class Calculator {

}
```

The test compiles now, but it doesn't test anything.

Now go back to the test and add a test for addition. Obviously, we should test 2 + 2.

```java
@Test
public void testAdd() {
    assertEquals(4, calc.add(2, 2)); // 2 + 2 = 4
}
```

The test doesn't compile again, as the calculator has no add() method. Create the method. You can use the IDE's suggestions (Alt+Enter).

```java
public class Calculator {

    public int add(int i, int j) {
        return 0;
    }
}
```

The IDE has put return 0; as the method body. A good stub, no worse than any other option. The test now compiles but fails, as we expect two plus two to equal four, not zero.

What is the minimal change needed to make the test "green"? Obviously, returning 4:

```java
public int add(int i, int j) {
    return 4;
}
```

That's it, the test is "green". Hooray, we've implemented the addition!

Yeah, don't worry, TDD works exactly this way. You make the minimal change to the code that turns the tests "green".

The problem here is not the code but too few tests. Let's add one more test:

```java
@Test
public void testAdd2_3() {
    assertEquals(5, calc.add(2, 3)); // 2 + 3 = 5
}
```

Now we have to change the code and, finally, make it obviously correct:

```java
public int add(int i, int j) {
    return i + j;
}
```

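And, of course, don't forget to check the edge cases. For example, Java int arithmetic silently wraps around on overflow, so adding 1 to Integer.MAX_VALUE yields Integer.MIN_VALUE: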

```java
@Test
public void testAddBig() {
    assertEquals(Integer.MIN_VALUE, calc.add(Integer.MAX_VALUE, 1));
}
```
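testAddBig pins down wrap-around as the expected behavior. If your specification instead says overflow is an error, the test and the code change together. A minimal sketch under that assumption (StrictCalculator is a hypothetical name) using the JDK's Math.addExact, which throws ArithmeticException when an int sum overflows:

```java
// Hypothetical variant of the calculator whose spec treats overflow as an error.
class StrictCalculator {

    // Math.addExact (JDK 8+) returns i + j, or throws ArithmeticException
    // if the result does not fit into an int.
    public int add(int i, int j) {
        return Math.addExact(i, j);
    }
}
```

The matching JUnit test would then replace assertEquals with @Test(expected = ArithmeticException.class), the same style the article uses for division by zero.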

You can implement the mult(), sub(), and div() methods of our calculator in the same way.

First, you write an obvious test. Then you write an obvious minimal implementation that satisfies it. Then you write less obvious tests with different input data and improve the implementation until it satisfies all of them. Then you add tests for the edge cases. You can stop when you have tests for all the tricky cases you aren't too lazy to cover, and all the tests pass.

For example, the tricky case for division is a zero divisor:

```java
@Test(expected = ArithmeticException.class)
public void testDiv_0() {
    calc.div(2, 0);
}
```
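One possible end state of the class after repeating the cycle for the remaining operations is sketched below. It is an assumption about where the tests lead, not the code from the article's repository. Note that div() needs no explicit zero check to pass testDiv_0: Java integer division by zero already throws ArithmeticException.

```java
// A sketch of the Calculator after the red/green cycle for all four operations.
class Calculator {

    public int add(int i, int j) {
        return i + j;
    }

    public int sub(int i, int j) {
        return i - j;
    }

    public int mult(int i, int j) {
        return i * j;
    }

    // Integer division truncates toward zero; a zero divisor makes the JVM
    // throw ArithmeticException, which is exactly what testDiv_0 expects.
    public int div(int i, int j) {
        return i / j;
    }
}
```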

Once you have the tests, you can sleep well. Even better if you've also paid all your taxes. If the tests run before every deployment, buggy code that they catch can never reach production.

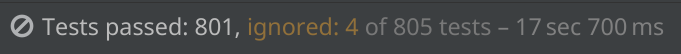

The number of tests should be sufficient: enough to sleep well. Unfortunately, formal coverage metrics don't correlate precisely with this kind of well-being. Even 100% covered code can contain bugs. On the other hand, there is no need to cover every catch block just to reach 100% coverage; in most cases, it's a waste of effort.
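A small illustration of why coverage is not correctness (MathUtil and abs are hypothetical, not from the article): two inputs exercise both branches of abs(), giving full line and branch coverage, yet the method is still wrong for Integer.MIN_VALUE, because negating it overflows back to itself. The JDK's own Math.abs documents the same behavior.

```java
// Hypothetical helper: both branches are trivially covered by two checks.
class MathUtil {

    static int abs(int x) {
        return x < 0 ? -x : x;
    }

    public static void main(String[] args) {
        System.out.println(abs(5));   // positive branch, prints 5
        System.out.println(abs(-5));  // negative branch, prints 5
        // 100% covered, still buggy: -Integer.MIN_VALUE overflows.
        System.out.println(abs(Integer.MIN_VALUE)); // prints -2147483648
    }
}
```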

If you find a new bug, you must write a new test that demonstrates it. It can be hard, but it's absolutely required. How else will you know the bug is fixed? After a release to staging and manual testing? That's unsportsmanlike, to say the least, and the feedback cycle is far too long.

The goal of TDD is to shorten this cycle: only minutes pass between demonstrating the bug with a test and fixing it.

Tests affect the code. For example, take a typical Hello World.

```java
public class HelloWorld {

    public static void main(String[] args) {
        System.out.println("Hello, TDD!");
    }
}
```

How do you test it? It's possible, if you find out how System works and what System.out is: it can be replaced with System.setOut().

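```java
import static org.junit.Assert.assertEquals;

import java.io.ByteArrayOutputStream;
import java.io.PrintStream;
import org.junit.After;
import org.junit.Before;
import org.junit.Test;

public class HelloWorldTest {

    ByteArrayOutputStream out;
    PrintStream originalOut;

    @Before
    public void setUp() {
        originalOut = System.out;
        out = new ByteArrayOutputStream();
        System.setOut(new PrintStream(out));
    }

    @After
    public void tearDown() {
        System.setOut(originalOut); // put the real console back
    }

    @Test
    public void testMain() {
        HelloWorld.main(null);
        // println() appends the platform line separator, which is not always "\n"
        assertEquals("Hello, TDD!" + System.lineSeparator(), getOut());
    }

    String getOut() {
        return new String(out.toByteArray());
    }
}
```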

This is where mock objects are very useful. If Hello World depends on System.out, let's not just override it, but mock it.

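```java
import static org.mockito.Mockito.*;

import java.io.PrintStream;
import org.junit.After;
import org.junit.Before;
import org.junit.Test;

public class HelloWorldMockTest {

    PrintStream out;
    PrintStream originalOut;

    @Before
    public void setUp() {
        originalOut = System.out;
        out = mock(PrintStream.class);
        System.setOut(out);
    }

    @After
    public void tearDown() {
        System.setOut(originalOut); // put the real console back
    }

    @Test
    public void testMain() {
        HelloWorld.main(null);
        verify(out).println(eq("Hello, TDD!"));
    }
}
```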

With Mockito, the intent of the test becomes much clearer, doesn't it?

Hello World is not a pure function. It has side effects, such as printing a message. In fact, the whole program is nothing but a side effect.

And there is an implicit dependency on System.out.

We can mock dependencies in tests, but that's not always possible. Even here, we override System.out, which is a global singleton. Who knows how that may affect other tests, especially if they run in parallel.

So it's better to separate what we can test from what we cannot. Why should we test how System.out.println() works? What matters is the message. Let's test it separately.

```java
public class HelloWorld2 {

    public static void main(String[] args) {
        System.out.println(getHello());
    }

    static String getHello() {
        return "Hello, TDD!";
    }
}
```

And now the test becomes obviously stupid:

```java
import static org.junit.Assert.assertEquals;

import org.junit.Test;

public class HelloWorld2Test {

    @Test
    public void testGetHello() {
        assertEquals("Hello, TDD!", HelloWorld2.getHello());
    }
}
```

This is how tests can affect the code: by simplifying it. Or by making it more complex, depending on your point of view :)
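Another way to remove the implicit dependency on System.out, not shown in the original example, is to pass the stream in as a parameter. In this sketch (HelloWorld3, greet and captureGreeting are hypothetical names), main() still wires in the real console, but the logic receives any PrintStream, so a test can capture the output in a ByteArrayOutputStream without touching global state. That also keeps it safe when tests run in parallel.

```java
import java.io.ByteArrayOutputStream;
import java.io.PrintStream;

// Hypothetical variant: the output stream is an explicit dependency.
class HelloWorld3 {

    public static void main(String[] args) {
        greet(System.out); // production wiring: the real console
    }

    // Writes the greeting to whatever stream the caller provides.
    static void greet(PrintStream out) {
        out.println("Hello, TDD!");
    }

    // What a test can do: capture the output locally, no System.setOut() needed.
    static String captureGreeting() {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        greet(new PrintStream(buffer, true));
        return buffer.toString();
    }
}
```

The trade-off is one extra parameter in exchange for tests that neither replace nor mock a JVM-wide singleton.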

Another common question about tests: what should be tested, the contract or the implementation?

One idea is to write a single test for the contract, expressed, for example, as a Java interface (setting aside that a Java interface is a weak contract: it fixes method signatures, not behavior), and then apply this test to every implementation of the interface.

Let's say I have this interface:

```java
public interface MessageStorage {

    void save(Message message);
}
```

And three implementations: the first saves a message to Redis, the second to Postgres, and the third to both Redis and Postgres.

What is there to test in this interface? Again, as in the Hello World example, there are only side effects.

Okay, the implementations surely depend on some repositories that write to Redis and Postgres. And these dependencies, I hope, are injected through the constructor. We can test that calling save() also calls the methods of these repositories. But different implementations may have different constructors. Do all the repositories share a common interface we could mock? And so on, and so forth...

By the way, there is one important idea here. The Redis and Postgres implementations can implement MessageStorage themselves. Then, instead of a dedicated Redis+Postgres implementation, we can write a universal one that saves the message to a list of other MessageStorage implementations.

```java
public class RedisMessageStorage implements MessageStorage {
    //...
}

public class PostgresMessageStorage implements MessageStorage {
    //...
}

public class ComposedMessageStorage implements MessageStorage {

    public ComposedMessageStorage(List<MessageStorage> nested) {
        //...
    }

    //...
}
```

Each of these implementations still needs its own test, because their dependencies, writing to Redis or to Postgres, are different. However, we have invented ComposedMessageStorage, which may be useful elsewhere.
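A minimal sketch of how ComposedMessageStorage might be filled in and tested, assuming a simple Message class with a single text field (the article doesn't show Message). InMemoryMessageStorage is a hypothetical hand-written test double. Because the composite talks only to the MessageStorage interface, its test needs neither Redis, Postgres, nor mocks of their repositories.

```java
import java.util.ArrayList;
import java.util.List;

// Assumed shape of Message; the article does not define it.
final class Message {
    final String text;

    Message(String text) {
        this.text = text;
    }
}

interface MessageStorage {
    void save(Message message);
}

// Saves every message to each nested storage, in list order.
class ComposedMessageStorage implements MessageStorage {
    private final List<MessageStorage> nested;

    ComposedMessageStorage(List<MessageStorage> nested) {
        this.nested = new ArrayList<>(nested); // defensive copy
    }

    @Override
    public void save(Message message) {
        for (MessageStorage storage : nested) {
            storage.save(message);
        }
    }
}

// Hypothetical test double: records saved messages instead of hitting a database.
class InMemoryMessageStorage implements MessageStorage {
    final List<Message> saved = new ArrayList<>();

    @Override
    public void save(Message message) {
        saved.add(message);
    }
}
```

A test builds two InMemoryMessageStorage instances, wraps them in a ComposedMessageStorage, calls save() once, and checks that each fake recorded the same message.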

TDD is mostly about unit tests, because you need to switch quickly between writing tests and writing code. However, there are things you cannot check with unit tests.

A typical example is SQL queries. With unit tests, you can (and must) check that you generate what you believe is correct SQL. But you cannot check that this SQL actually works in a real DBMS. For a start, the tables and data may differ. Or, for example, Amazon Redshift looks like PostgreSQL 8 but actually differs significantly.
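For illustration, here is a hypothetical query builder (MessageSql, insertMessages, and the messages table with id and text columns are all assumptions, not from the article) of the kind a unit test can cover. The test can pin down the exact SQL text, quickly and precisely, but it says nothing about whether a real database with your actual schema accepts it.

```java
import java.util.StringJoiner;

// Hypothetical builder for a multi-row INSERT with one placeholder pair per message.
final class MessageSql {

    private MessageSql() {
    }

    static String insertMessages(int rows) {
        if (rows < 1) {
            throw new IllegalArgumentException("rows must be positive: " + rows);
        }
        StringJoiner values = new StringJoiner(", ");
        for (int i = 0; i < rows; i++) {
            values.add("(?, ?)"); // values are bound later, never concatenated in
        }
        return "INSERT INTO messages (id, text) VALUES " + values;
    }
}
```

A unit test can assert that insertMessages(2) returns INSERT INTO messages (id, text) VALUES (?, ?), (?, ?). Whether the table really has those columns, or whether the target DBMS accepts this syntax, only an integration test can tell.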

To fully check SQL query execution, you need integration tests. For example, you can run Redis and Postgres in Docker containers, apply the necessary migrations, add the required data, and call your methods. Then check that the data changed as expected. It's slow and laborious, but it works, and it's very useful.

Can integration tests be considered part of TDD? Why not?

Keep the bar green! (And no, this isn't about a bar serving green cocktails.)

P.S. The examples from this article can be found in the repository. Check the history.